An AI app store focused on UX and DX

Build AI apps

Built by developers for developers

Let's solve the pain points of developing and using AI apps together

Our mission is to help developers build sustainable AI apps, agents and tools with improved UX for everyone

The high cost of AI inference makes it impossible for indie devs to build free or inexpensive apps. Why not let each user pay for exactly what they consume in every app, without the friction of paywalls and subscriptions?

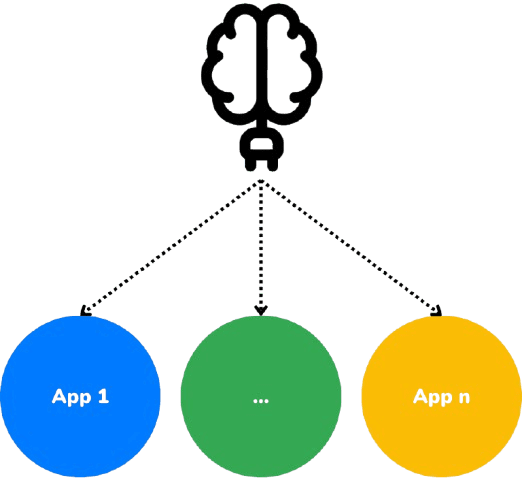

We solve this with portable accounts that can be connected to every app with a single click. We call these accounts 'brains'.

How it Works

Each user has a portable brain that they can link to any app with a single click.

The brain provides your app with specific features to help you develop and monetize without paywalls. Conversion increases because users do not need to sign up and add payment details to every app separately.

Forget about inference costs.

Users can top up their brains and spend credits across all apps. A user's brain automatically covers the inference costs they incur in your app, so you don't have to worry about them.

Frictionless sign-up without forms

Brains are linked to your app with a single click, providing you with the user's information and eliminating the need for extra sign-up forms.

Monetize your app without paywalls.

Users are tired of subscriptions. You can set a markup on the user's inference spend, charge per run, or both. Your app monetizes automatically through the user's brain, eliminating the need for extra paywalls.
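To make the two pricing levers concrete, here is a minimal sketch of the arithmetic, assuming a percentage markup and a flat per-run fee expressed in credits. The `Pricing` type, the `chargeForRun` function and the credit units are hypothetical names chosen for illustration, not part of BrainLink's API.

```typescript
// Hypothetical pricing model: names and units are illustrative only.
interface Pricing {
  markupPercent: number; // e.g. 25 means 25% on top of the inference cost
  feePerRun: number;     // flat charge per run, in credits (0 to disable)
}

interface RunCharge {
  userPays: number;       // total credits deducted from the user's brain
  developerEarns: number; // portion that goes to the app developer
}

// Computes what a single run costs the user and what the developer earns.
function chargeForRun(inferenceCost: number, pricing: Pricing): RunCharge {
  // Multiply before dividing to keep whole-credit inputs exact.
  const markup = (inferenceCost * pricing.markupPercent) / 100;
  const developerEarns = markup + pricing.feePerRun;
  return { userPays: inferenceCost + developerEarns, developerEarns };
}
```

For example, a run that consumes 100 credits of inference with a 25% markup and a 5-credit per-run fee would cost the user 130 credits, of which 30 go to the developer.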

Leverage an effective distribution channel

Benefit from the user base we build together: every user with a brain is just one click away from becoming a user of your app as well.

Unified LLM API

Use a growing set of 150+ AI models through a single OpenAI-compatible API.

Frequently Asked Questions

Take a look at the most common questions we receive.

How to integrate

Show the BrainLink connect button

Create a button or link pointing to the BrainLink connection page, or use our embeddable button. When the user clicks it, they are prompted to accept the connection.
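As a rough sketch of this step, the helper below builds the link a custom button could point to. The connection page address and the `app_id` and `redirect_uri` parameter names are placeholders assumed for illustration; the real values come from the BrainLink documentation.

```typescript
// ASSUMPTION: placeholder address, not the real BrainLink connection page.
const CONNECT_PAGE = "https://brainlink.example/connect";

// Builds the URL for a "connect" button or link.
// ASSUMPTION: the parameter names `app_id` and `redirect_uri` are hypothetical.
function buildConnectUrl(appId: string, redirectUri: string): string {
  const url = new URL(CONNECT_PAGE);
  url.searchParams.set("app_id", appId);
  // Where the user should land after accepting the connection.
  url.searchParams.set("redirect_uri", redirectUri);
  return url.toString();
}
```

The resulting string can be used as the `href` of an ordinary link, for example `buildConnectUrl("my-app", "https://myapp.example/callback")`.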

Obtain the user access token

Once the user accepts the connection, they are redirected back to your application. You can then get the user access token to perform inference calls on behalf of the user.
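How the token is delivered is not described here, so the following is only a sketch under one common assumption: the redirect back to your app carries a short-lived value in a `code` query parameter, which your server then exchanges for the user access token. The `code` parameter name and the `extractConnectionCode` helper are hypothetical.

```typescript
// Reads the value handed back on the redirect to your application.
// ASSUMPTION: a query parameter named `code`; the real name and the
// exchange step for the access token depend on BrainLink's documentation.
function extractConnectionCode(callbackUrl: string): string | null {
  const url = new URL(callbackUrl);
  const code = url.searchParams.get("code");
  // Treat a missing or empty parameter as "no connection was granted".
  return code !== null && code.length > 0 ? code : null;
}
```

A `null` result is a reasonable signal to show the connect button again rather than attempting any inference call.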

Use the access token on your inference requests

Use the user access token to make inference requests to the model of your choice on behalf of the user. Because BrainLink is OpenAI compatible, you can use any AI SDK by configuring its baseUrl, or make direct REST API calls.
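For the direct REST route, a request could look roughly like the sketch below. It follows the standard OpenAI chat completions shape (`POST {baseUrl}/chat/completions` with `model` and `messages`). Sending the user access token as a Bearer token is an assumption based on how OpenAI-compatible APIs usually authenticate, and the base URL and model name in the usage note are placeholders.

```typescript
interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Builds an OpenAI-style chat completion request without sending it.
// ASSUMPTION: the user access token is passed as a Bearer token.
function buildChatRequest(
  baseUrl: string,
  userAccessToken: string,
  model: string,
  prompt: string
): ChatRequest {
  return {
    // Strip trailing slashes so the path is joined cleanly.
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${userAccessToken}`,
      },
      body: JSON.stringify({ model, messages: [{ role: "user", content: prompt }] }),
    },
  };
}

// Sends the request; inference is performed on behalf of the token's user.
async function sendChat(req: ChatRequest): Promise<unknown> {
  const res = await fetch(req.url, req.init);
  if (!res.ok) throw new Error(`Inference request failed: ${res.status}`);
  return res.json();
}
```

Usage would be along the lines of `sendChat(buildChatRequest("https://brainlink.example/v1", token, "some-model", "Hello"))`. With an SDK, the same base URL and token would go into the client's `baseUrl` and API key settings instead.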

BrainLink
